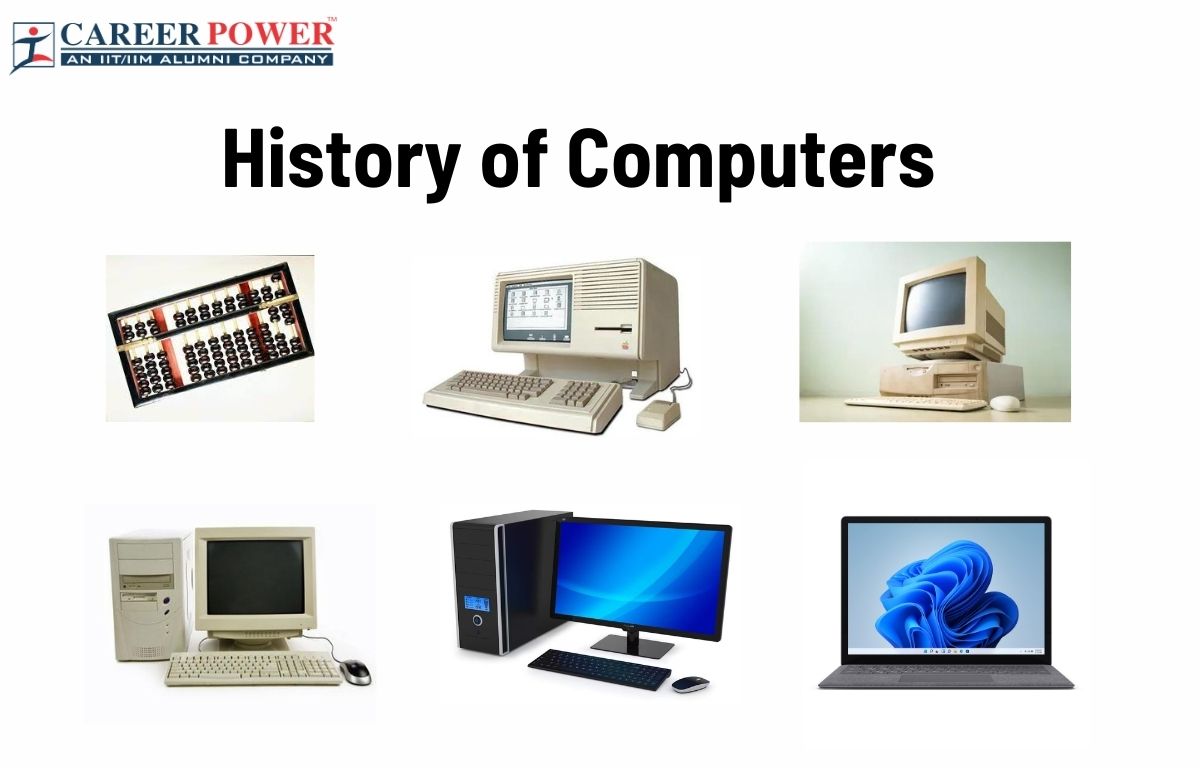

The computer is one of the most important devices of the modern era. Understanding the history of computers not only reveals the roots of modern machines but also shows how human ingenuity and engineering converged to reshape the course of civilization. This article traces the early, middle, and modern history of computers, from the mechanical calculators of the 17th century through the 19th and 20th centuries to the devices of the 21st, covering the key inventions that made fast calculation and computing possible.

History of Computers

The history of computers is a fascinating journey through innovation, ingenuity, and the relentless pursuit of automating complex tasks. From colossal, room-filling machines to today’s sleek and powerful devices that fit in the palm of a hand, the evolution of computers has been nothing short of revolutionary.

- Ancient Calculating Devices: The history of computers dates back thousands of years to ancient civilizations, which used tools like the abacus, a simple counting device, to perform basic calculations.

- Mechanical Calculators: In the 17th century, Blaise Pascal and later, Gottfried Wilhelm Leibniz, developed mechanical calculators that could perform more complex mathematical operations.

- Charles Babbage’s Analytical Engine: In the 19th century, Charles Babbage conceptualized the Analytical Engine, often considered the first design for a programmable, general-purpose computer. Although it was never built during his lifetime, his ideas laid the foundation for modern computing.

- Mechanical and Electromechanical Computers: The late 19th and early 20th centuries saw the creation of machines like the Hollerith Tabulating Machine, first used for the 1890 US census, and Konrad Zuse’s Z3 (1941), an electromechanical relay machine generally regarded as the first working programmable digital computer.

- ENIAC and the Dawn of Electronic Computing: The Electronic Numerical Integrator and Computer (ENIAC), completed in 1945 and publicly unveiled in 1946, marked the transition from electromechanical to electronic digital computers. ENIAC was massive and could perform complex calculations much faster than previous machines.

- Transistors and Miniaturization: The invention of the transistor at Bell Labs in 1947 revolutionized computing. From the 1950s onward, transistors replaced bulky vacuum tubes, making computers smaller, more reliable, and more energy-efficient.

- Birth of the Personal Computer: The 1970s witnessed the emergence of the personal computer (PC). Machines like the Altair 8800 and the Apple I made computing accessible to individuals.

- Graphical User Interfaces (GUIs) and the Mouse: Xerox’s Alto, and later the Apple Macintosh, brought GUIs and the mouse into practical use, changing how users interacted with computers.

- The Internet and World Wide Web: In the late 1960s, ARPANET, the precursor to the internet, was created. Tim Berners-Lee proposed the World Wide Web in 1989 and released it publicly in 1991, further transforming how we access and share information.

- Mobile Computing and Smartphones: The development of microprocessors and advancements in miniaturization led to the rise of mobile computing. The introduction of smartphones and tablets revolutionized personal computing.

- Artificial Intelligence and Beyond: Today, computers are not just tools for calculations; they are also used for artificial intelligence, machine learning, and other advanced applications that continue to shape the future.

Early History of Computers

Computer history is a story of visionary ideas, mechanical marvels, and the gradual emergence of modern computing concepts. Our digital age began with simple devices that performed basic calculations, evolving over centuries into sophisticated machines.

The early history of computers shows how far human innovation and problem-solving can reach. The sections below move through time, period by period, and reflect the extraordinary progress that continues to shape our technological landscape.

17th Century

The first inklings of mechanical calculation can be traced back to ancient civilizations like the Greeks and Chinese, who devised counting devices such as the abacus. However, the true watershed moment came in the 17th century with the advent of mechanical calculators like Blaise Pascal’s Pascaline and Gottfried Wilhelm Leibniz’s stepped reckoner. The Pascaline could add and subtract directly, while the stepped reckoner was designed to multiply and divide as well, all by purely mechanical means.

19th Century

The 19th century witnessed remarkable strides in mechanical computation. Charles Babbage, often hailed as the “father of the computer,” conceived the Analytical Engine in the 1830s. This groundbreaking design incorporated an arithmetic logic unit, control flow through conditional branching, and memory, akin to the elements found in modern computers. Although never fully realized during Babbage’s lifetime, the Analytical Engine laid the conceptual foundation for future computing devices.

Late 19th Century

In the late 19th century, Herman Hollerith, an American inventor, made significant strides in data processing. For the 1890 US census he built an electromechanical machine, the Tabulating Machine, that summarized information stored on punched cards. It could tabulate statistics and records and sort data easily, and it was soon widely adopted for complex accounting and inventory-control work. Hollerith’s Tabulating Machine Company merged with other firms in 1911 to form the Computing-Tabulating-Recording Company, which was renamed International Business Machines (IBM) in 1924. His punched card system revolutionized information handling and marked a pivotal moment in the convergence of computation and data management.

Early 20th Century

The first half of the 20th century closed with the advent of electronic computing. The ENIAC (Electronic Numerical Integrator and Computer), completed in 1945, is often heralded as the world’s first general-purpose electronic digital computer. ENIAC was a colossal machine, filling an entire room with vacuum tubes and electrical circuits, yet it marked a major leap in computational capability.

Subsequent machines such as the EDVAC and UNIVAC, together with the stored-program architecture described by John von Neumann in 1945, further propelled the evolution of computers. In a stored-program design, instructions and data are held in the same memory, so a machine can be given a new task by loading a new program rather than by being rewired. Along with random-access memory (RAM), this concept laid the groundwork for modern computing architecture.

Mid 20th Century

By the mid-20th century, a new era had dawned, characterized by the proliferation of electronic computers in academia, government, and industry. Each iteration brought advancements in speed, storage capacity, and versatility, solidifying the computer’s role as an indispensable tool for scientific research, business operations, and countless other applications.

Late 20th Century

The late 20th century witnessed the rise of the internet, a global network of interconnected computers that revolutionized communication, commerce, and collaboration. Tim Berners-Lee proposed the World Wide Web in 1989 and released it publicly in 1991. Running on top of the internet, the Web enabled users to access and share information seamlessly across the globe.

History of Computers – MCQs with Answers

1. Who is credited with describing the stored-program architecture on which most modern computers are based?

a) John von Neumann

b) Grace Hopper

c) John Atanasoff

d) Steve Wozniak

Answer: a) John von Neumann

2. What was the primary purpose of the ENIAC computer, developed during World War II to compute artillery firing tables?

a) Scientific calculations

b) Playing video games

c) Weather forecasting

d) Code-breaking

Answer: a) Scientific calculations

3. On which network was the first networked email sent?

a) ARPANET

b) Xerox Alto

c) Apple Lisa

d) IBM 5100

Answer: a) ARPANET

4. What is the name of the experimental self-replicating program from the early 1970s that is often cited as the first computer virus?

a) Trojan Horse

b) ILOVEYOU

c) Creeper

d) Melissa

Answer: c) Creeper

5. Which computer technology paved the way for the development of modern smartphones and tablets?

a) Vacuum tubes

b) Transistors

c) Microprocessors

d) Punch cards

Answer: c) Microprocessors
